The Black Box

It is a peculiar and disturbing fact that we don’t really know what a computer is.

Yes, we know what they are made of and how they are assembled, but the same can be said of everyone you pass on the street. That is not what I mean by ‘is.’

I mean it in the ancient sense: what is it metaphysically? What is its place in reality? What purpose does it serve — not ‘for me right now,’ but ultimately.

We can decisively taxonomize most historically novel technologies into more primordial categories just by moving up one level of abstraction. A car is a type of vehicle, which puts it in the same category as a chariot or a boat. An LED bulb is a light source, like a lantern or candle. A rifle is a long-range weapon, like a sling or a bow.

What is a computer?

One could classify it as a tool, but this is a very high order abstraction and still does not encompass many of its functions. It can sing, it can read books, it can tell you about the past and future. It can talk to you. It can play games, process transactions and serve all manner of strange purposes never before united by a single artifact.

It seems to combine all the functions of civilization itself, which would mean the computer is ultimately something like artifice as such. But moving up to this only begs the same question, asked more precisely:

What is artifice as such?

We don’t have an answer because there isn’t one. This is our first direct encounter with such an entity.

So since we can’t really know what they are or what they are doing in this cosmic sense, why are they everywhere?

The Clay Tablet Paradigm

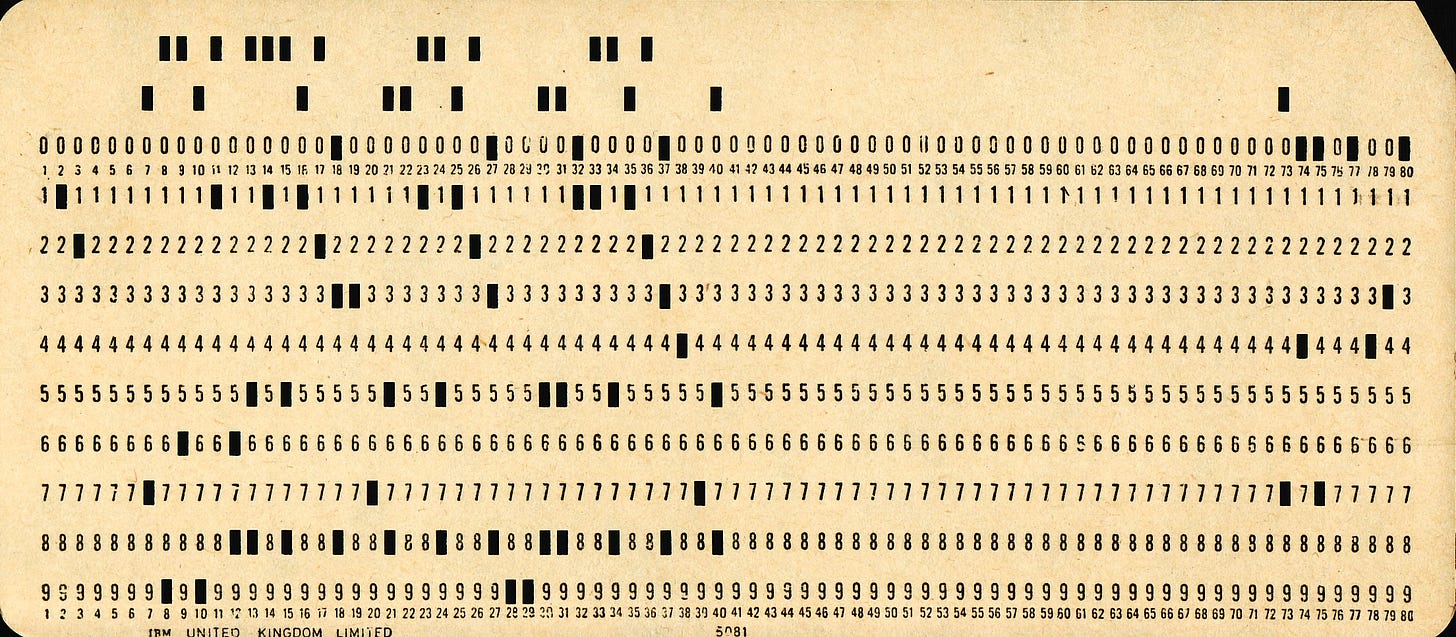

This is an industry-standard IBM punch card, the original computer data storage device. It encodes digital information as rows of physical holes punched into a leaf of cardstock. This data translates into a sequence of scheduled mechanical instructions to be executed by the computer.

This is one link in an ancient lineage of memory technologies reaching back many thousands of years. The punch card is ancestor to magnetic tape, vinyl records, hard drives and a descendant of the book, itself a descendant of the clay tablet.

All of these have something in common: they are meant to be read sequentially from beginning to end.

Images precede writing and are not structured this way. Pre-literate cultures store information symbolically in pictures and oral traditions passed down over generations. Even these myths, though often received and transmitted in poetic form, are preserved primarily by the memorable images they describe.

At some point, pictures evolve into pictograms, pictograms into phonograms, and a critical point is reached where the written word stores more usable information and preserves it more reliably than images can.

After this shift, the code sequence starts becoming the informationally superior medium, and thus, the dominant form of artificially enciphered memory.

Though this information storage system would be refined over the ages, it was subject to no radical innovation until AD 1948.

The only conspicuous difference between the Gudea cylinders and the IBM punch card is that the latter is meant to be read by a machine.

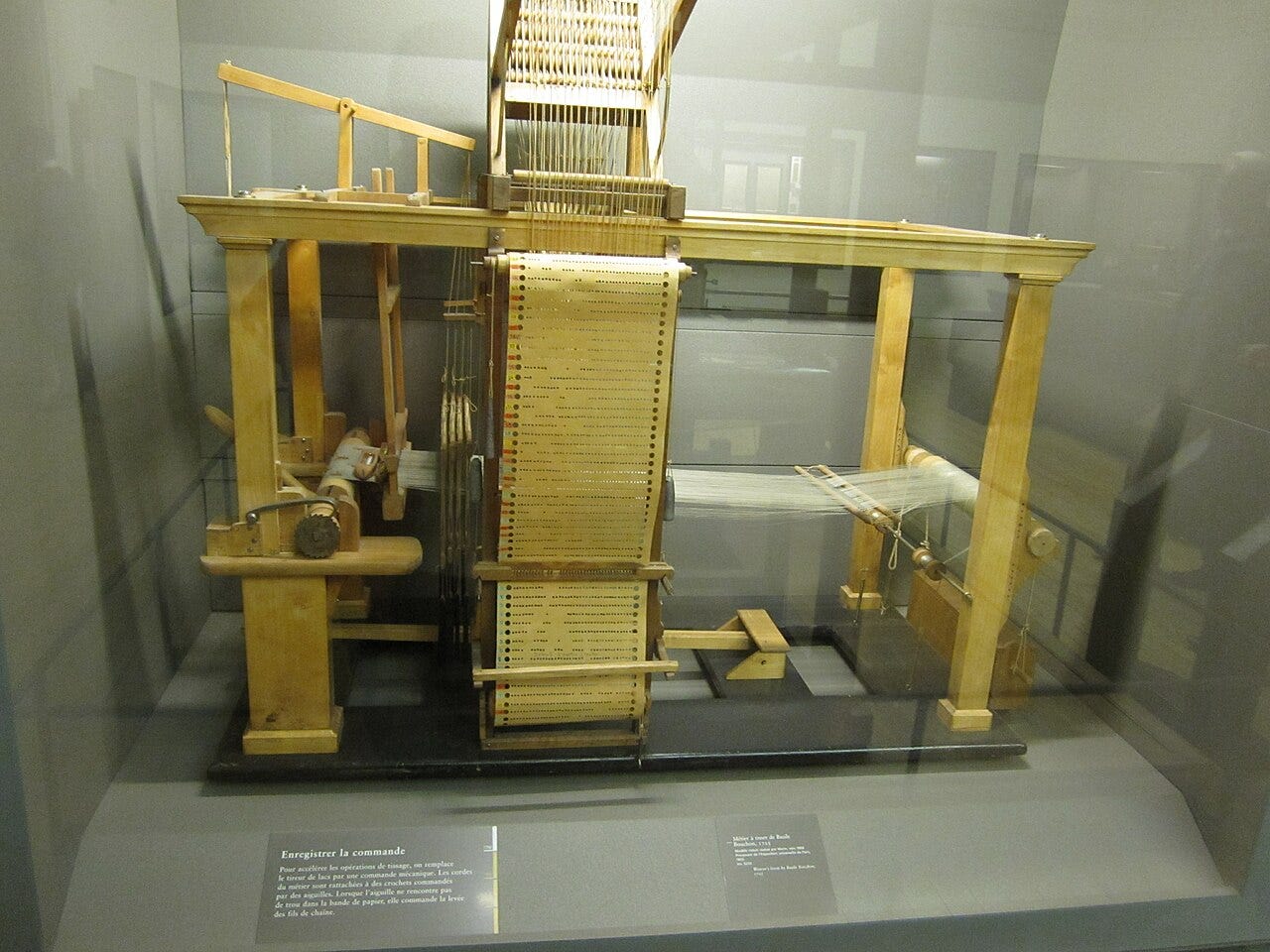

This strange invention: the programming language, which allows a human operator to issue directives to a mechanical system, was first conceived in 1725 by Basile Bouchon. Bouchon was a textile worker who developed a system whereby he controlled a loom — a weaving machine — using punched holes in paper tape.

In 1805, the programmable, automatic loom was nigh perfected by Joseph Marie Jacquard to help meet Napoleon’s orders for silk. He was personally rewarded by the Emperor with a pension of 3000 francs.

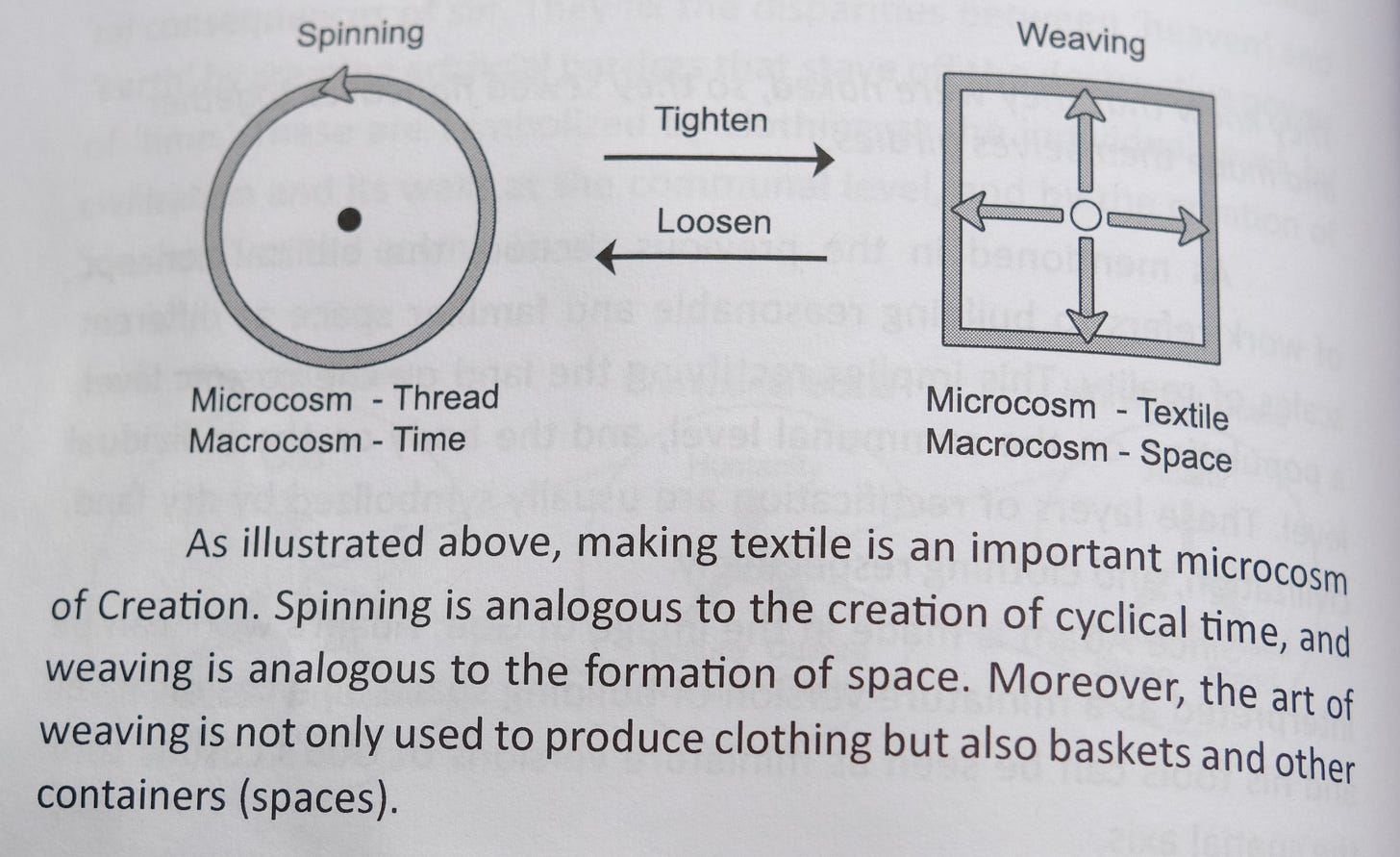

The punched tape of Bouchon, designed to weave thread into fabric (or, symbolically, to transmute time into space), is the prototype of the modern ‘program.’

Programs and Prophecies

In terms of pure etymology, the difference between a ‘program’ and a ‘prophecy’ is that a program is written and a prophecy is spoken. Prophecy means ‘stated in advance.’ Program, from the Greek programma, means ‘written in advance.’

The programma originally referred to public proclamations issued by rulers. Today it means anything scripted or scheduled (e.g. television and political programs).

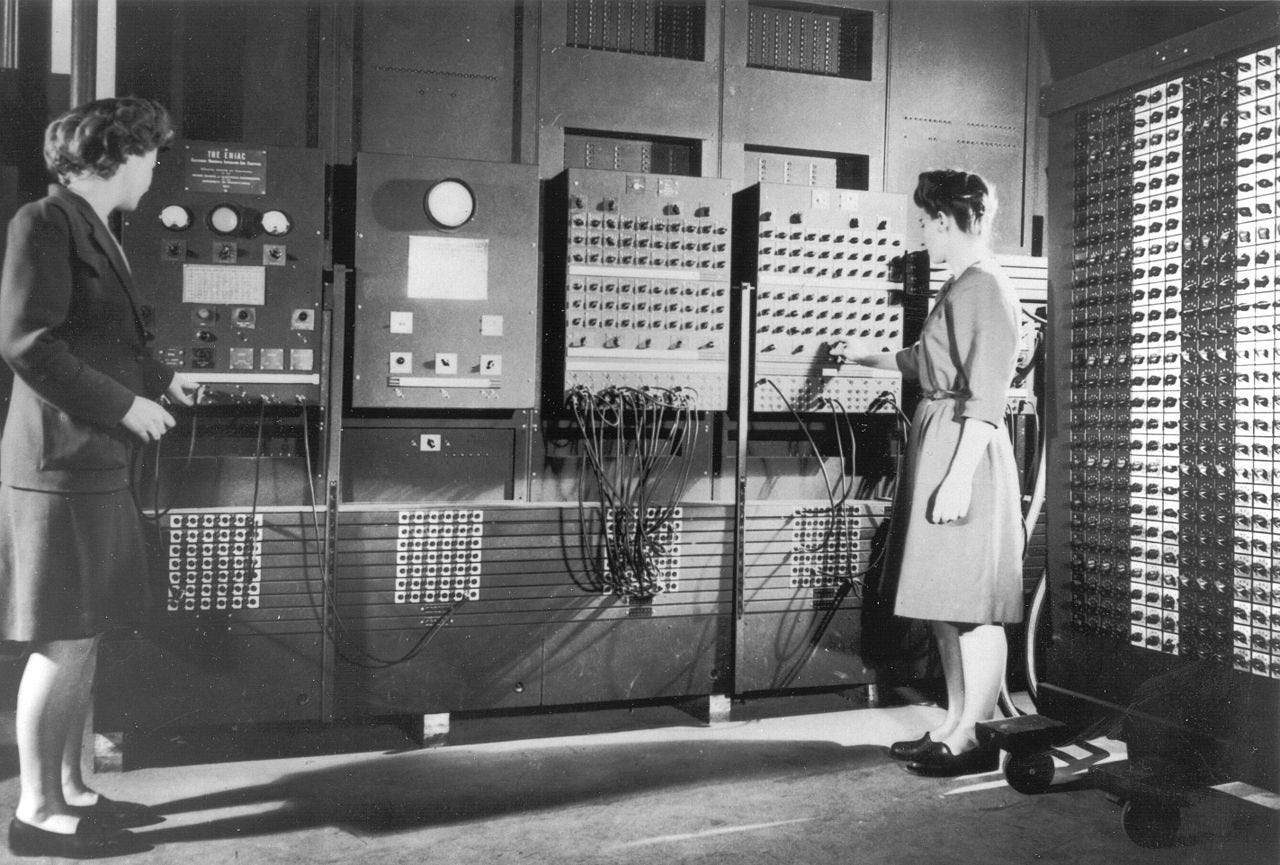

The term entered the world of computing around the time of the ENIAC, the first programmable, electronic, general purpose digital computer.

All that is to say: the first modern computer.

This machine, completed in 1945, was first programmed by a team of six women who interfaced directly with its hardware. They used it to calculate ballistic trajectories.

ENIAC, thus, served as a vast mechanical scrying apparatus which pronounced prophecies concerning the destinies of the enemies of the United States.

The idea of the computer as an instrument of Revelation points back to its origins. Colossus, operational in 1944, was an early computing machine built for British Intelligence and designed for cryptanalysis: specifically, to decode the Lorenz cipher.

The ten Colossi did the same thing computers do today: they decoded. They were revelation machines — apocalyptic engines.

The Colossus incorporated a paper tape data delivery system, whilst the ENIAC relied upon punch cards for data entry. Neither used what is today called “RAM.” Rather, these ponderous scrying devices read in precisely the same way that you are reading these words. However, they were designed to do it incredibly fast.

Colossus was clocked reading at a top speed of 53 miles per hour, or 9700 characters per second; a whole Bible approximately every seven minutes. It could go even faster — the paper tape, however, could not.
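The ‘Bible every seven minutes’ figure can be checked with a little arithmetic. This is only a rough sketch: the 9,700 characters per second comes from the figure above, while the length of a Bible (about four million characters) is an assumed round number, not a measurement.

```python
# Rough check of the "one Bible every seven minutes" figure.
CHARS_PER_SECOND = 9_700   # top tape speed quoted in the text
BIBLE_CHARS = 4_000_000    # ASSUMPTION: round estimate of an English Bible's length


def minutes_to_read(total_chars: int, chars_per_second: int) -> float:
    """Time to read total_chars sequentially at a fixed rate, in minutes."""
    return total_chars / chars_per_second / 60


bible_minutes = minutes_to_read(BIBLE_CHARS, CHARS_PER_SECOND)
print(f"{bible_minutes:.1f} minutes per Bible")
```

With that assumed length the result lands just under seven minutes. A shorter or longer estimate of the text shifts it proportionally.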

But linear causality has no ‘escape velocity’ and though these time chariots heralded the end of the cosmological paradigm that bore them, they could not see beyond.

From the Top Down

You’ve probably seen a “loading” screen on a computer before. What is being ‘loaded,’ and what is it being loaded into?

What’s being loaded is data on the hard drive.

What it’s being loaded into is Random Access Memory.

Why does it do that if the drive is already accessible? What makes RAM so special that you would add this extra step? And what is ‘random’ about it?

Every information storage device before the construction of the Manchester Baby in 1948 (with the exception of images) stored information sequentially.

Consider a VHS tape, which stores audio and video on magnetic tape. The amount of information on the tape, in human terms, is immense: thousands of books’ worth of data deciphered at a rate we cannot properly imagine, a few Bibles per minute.

However, if you’re at the beginning of the film, and for some reason, you want to see how it ends, you’d have to physically transport the final segment of magnetic tape all the way to the scanhead. Then and only then can it be seen.

This is how film reels work. It’s how disks work, how vinyl records work, how punch cards work. It’s how everything works except images and RAM.

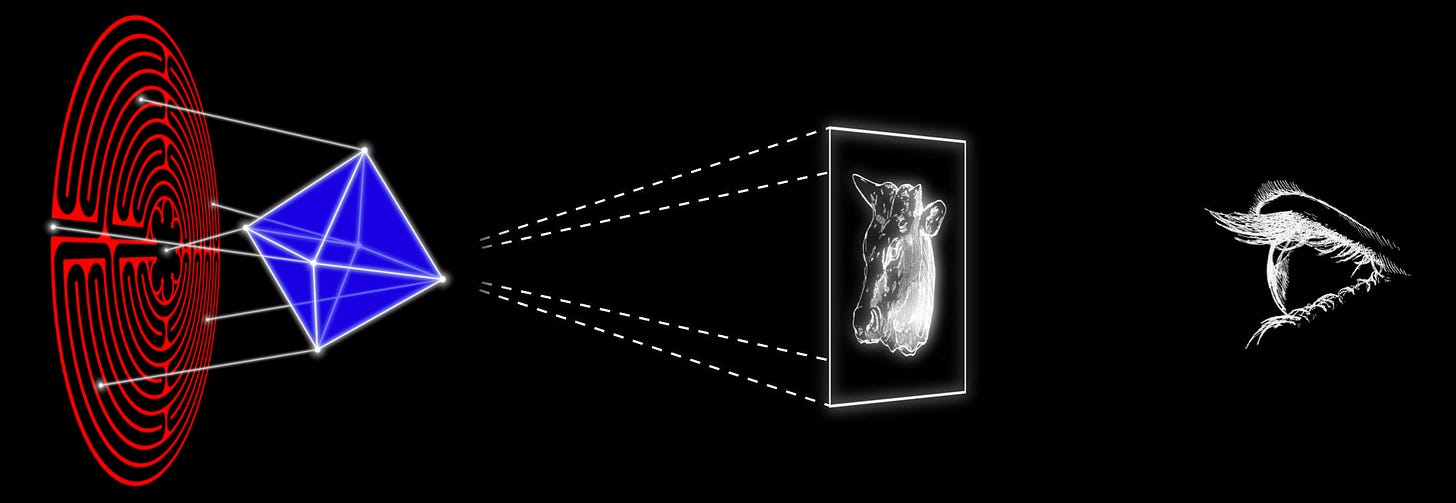

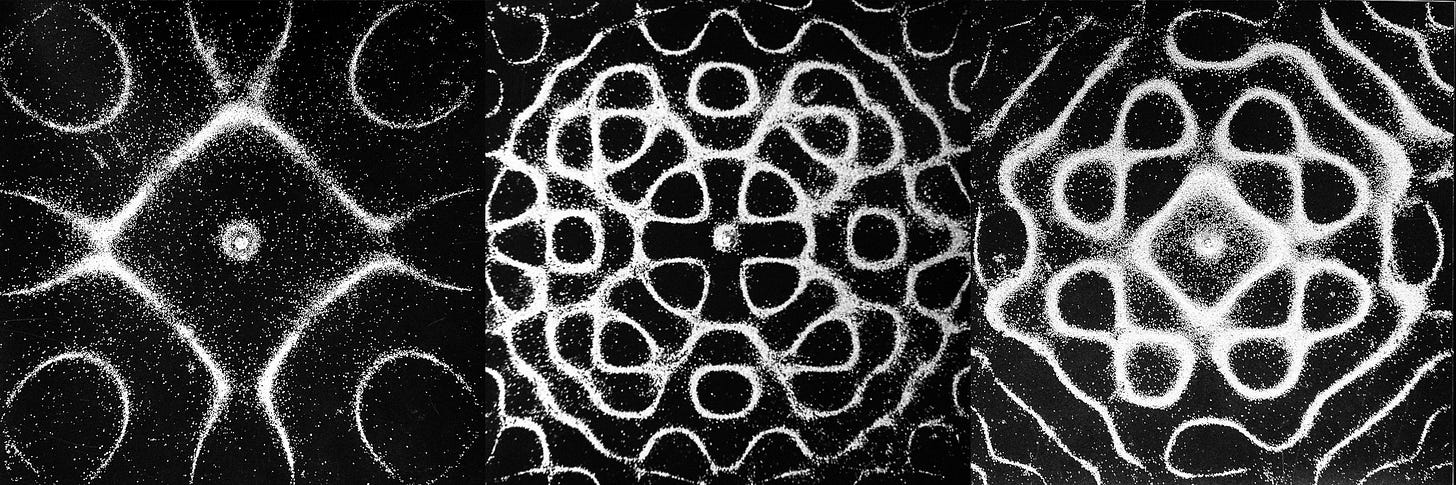

A computer with RAM can access any memory location near instantly without having to move from point A to point B. It can access the first scene, the last scene, the middle of the film, the twenty minute mark and the ninety minute mark, without ‘moving’ from one to the next. Rather, every scene of the movie is a simultaneous event ‘visible’ to the CPU as if it were looking down from above.
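The contrast can be sketched as a toy model. Everything here is hypothetical illustration: the `Tape` and `RandomAccessMemory` classes, the 120 one-minute ‘scenes,’ and the step counting are assumptions made for the example, not a description of real hardware.

```python
class Tape:
    """Sequential medium: the head must pass every cell between here and there."""

    def __init__(self, cells):
        self.cells = list(cells)
        self.head = 0    # current position of the read head
        self.steps = 0   # cells traversed so far

    def read(self, position):
        self.steps += abs(position - self.head)  # wind forward or backward
        self.head = position
        return self.cells[position]


class RandomAccessMemory:
    """Addressable medium: any cell is one lookup away."""

    def __init__(self, cells):
        self.cells = list(cells)
        self.lookups = 0

    def read(self, address):
        self.lookups += 1
        return self.cells[address]


film = [f"scene {i}" for i in range(120)]  # ASSUMPTION: one scene per minute
order = [0, 119, 60, 20, 90]  # first, last, middle, twenty and ninety minute marks

tape, ram = Tape(film), RandomAccessMemory(film)
tape_scenes = [tape.read(i) for i in order]
ram_scenes = [ram.read(i) for i in order]

print(tape.steps, ram.lookups)  # prints: 288 5
```

Both media return the same scenes. The tape has to wind across 288 cells to do it, while the addressable memory makes five lookups whose cost does not depend on where the last one was.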

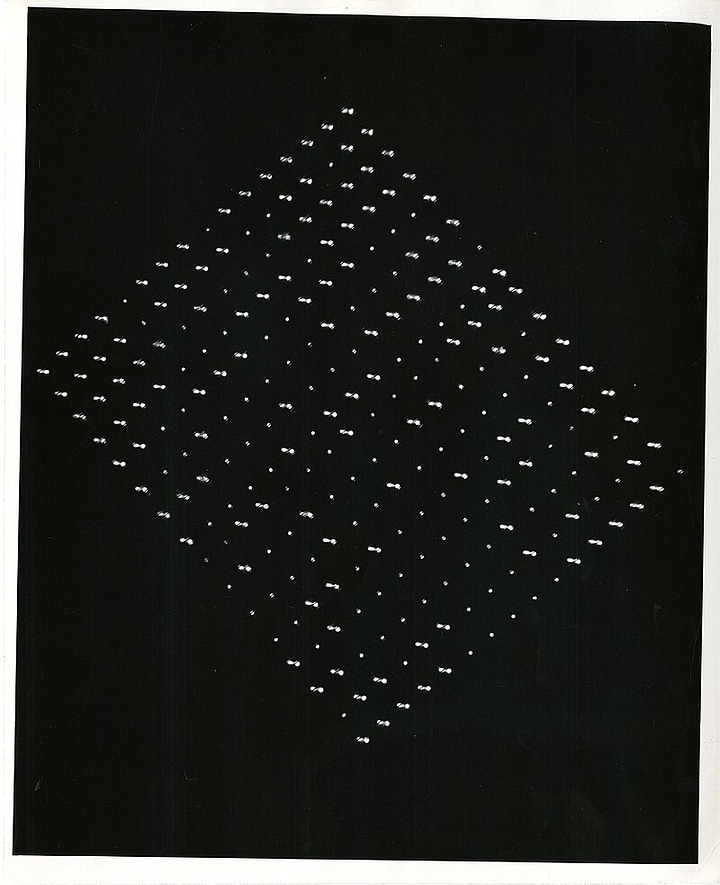

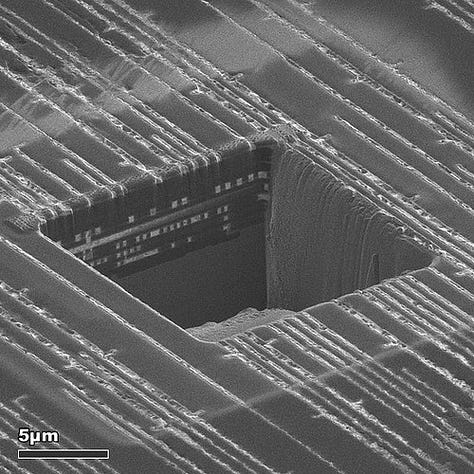

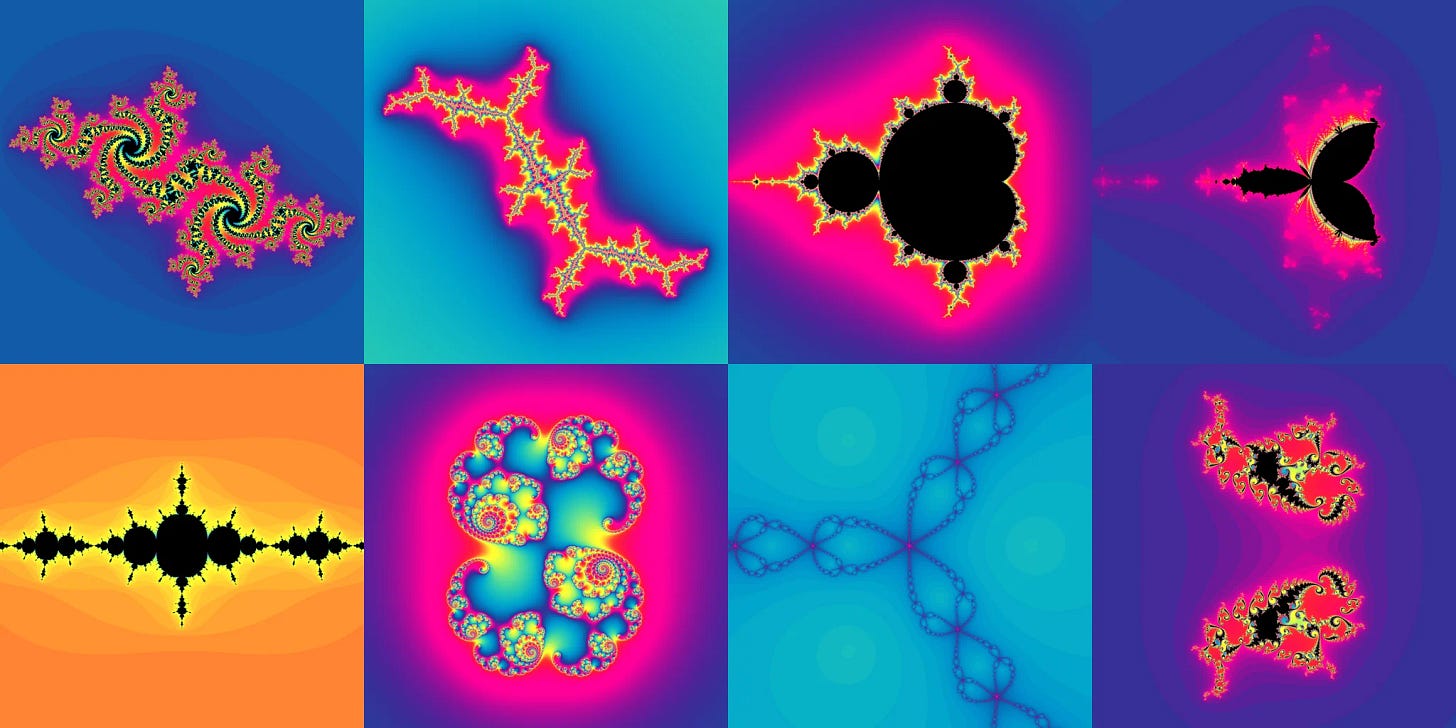

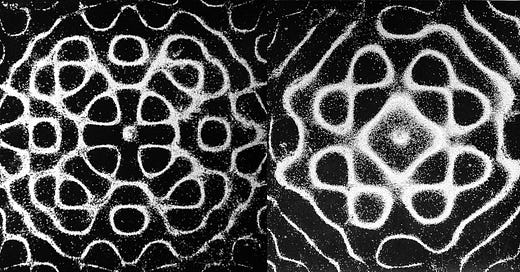

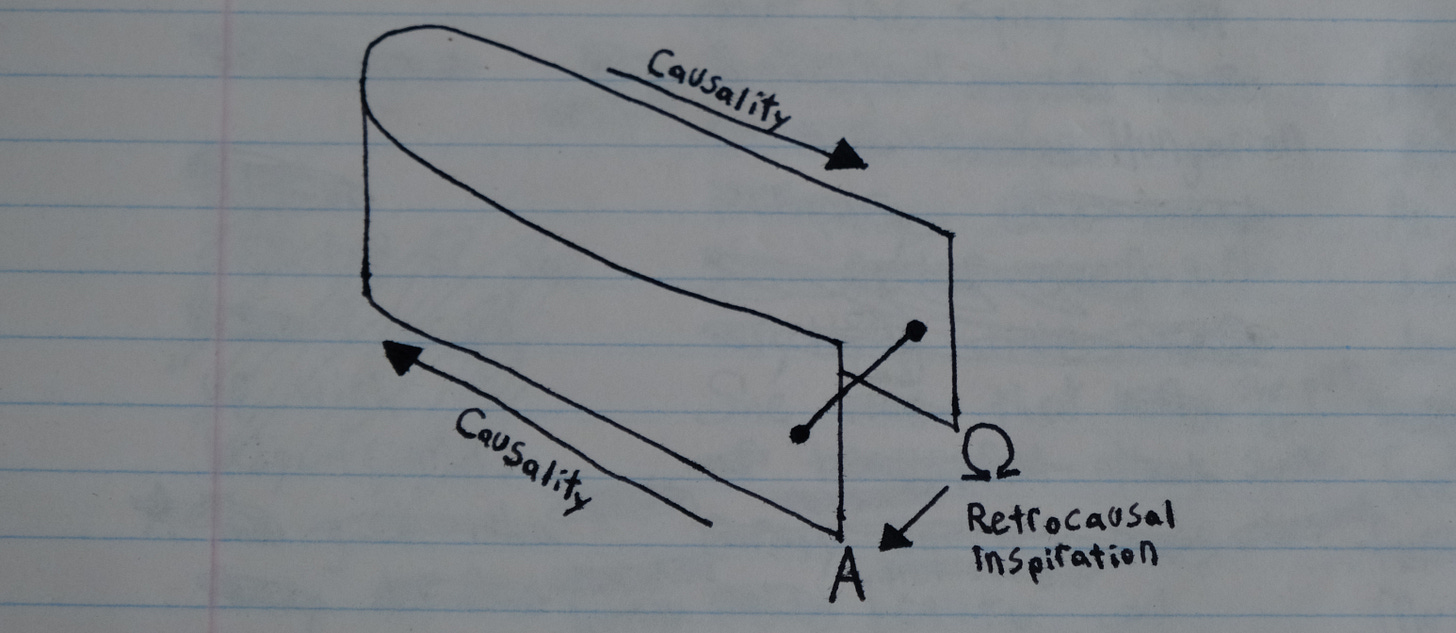

That is what this is:

What RAM does is weave threads of linear data into a quasi-visual tapestry of digital information. Just like an image, the information it represents can be accessed in any ‘random’ order.

RAM changed everything.

The graphical user interface, which makes the entire modern world possible, is just a technological byproduct of RAM. The user accesses an interface the same way a CPU accesses memory. It’s the same thing one level up.

The adaptation of our minds to this dreamscape of virtual images is responsible for the paradigmatic shift in our understanding of causation.

In the GUI, processing cycles are woven into a navigable virtual image where information is arrayed ‘vertically’ in hierarchical structures accessed through ‘icons.’

If the image above is rotated, it can be connected to the diagram of the ‘temple veil.’

This reveals the way in which the patterning of the past is remembered into being by a future observer who perceives it as a compacted symbolic gestalt.

This structure is then/was experienced into being at the level of detail by the observer in the past, thus closing the teleological circuit.

It is not possible to verbalize such thoughts in plain language until after the advent of RAM. It is still hard to articulate now. However, in saying it like this, the most important fact is circled and left unsaid.

The interface is not a convenient analogy that just so happens to be here when we need it. The interface is constructing this augmented state of consciousness and is doing so by design.

Face to Interface

The teleological circuit represents a ‘bootstrap paradox,’ in which the information event at the beginning is ‘caused’ by one at the end. The idiom ‘pull yourself up by your bootstraps’ is the origin of both the ‘bootstrap paradox’ and the ‘boot sequence’ in the language of computing.

Your computer loads or ‘boots up’ when turned on because RAM stores data electrically. This is called ‘volatile memory,’ because when the memory banks lose power, they forget everything.

This is why you save as you go.

Firmware is the bridge between software and hardware. It is stored permanently on the motherboard. When the computer turns on, the firmware initializes first. It then searches for the operating system and loads it so the computer can remember how to be a computer.

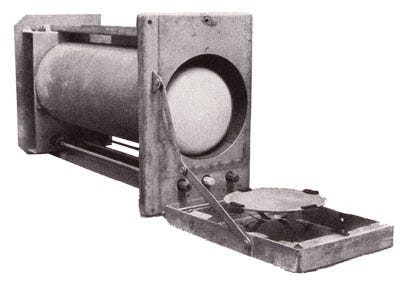

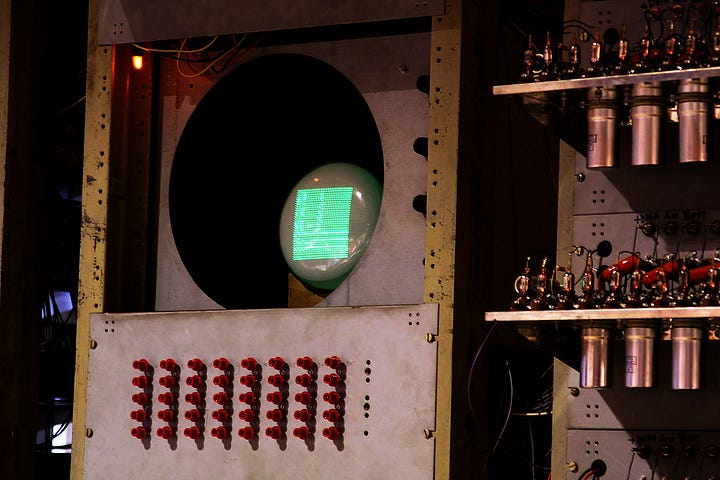

The earliest form of RAM was the Williams-Kilburn tube used in the ‘Manchester Baby’ in 1948. It is a cathode-ray tube, or CRT, a kind of vacuum tube. The CRT was first used in displays for scientific instruments, radar systems and televisions.

A CRT display creates images by scanning a beam from an electron gun across a screen coated with phosphors line by line. The excited phosphors fluoresce according to the strength of the beam.

In essence: the prototype of the memory bank was a small internal television which the computer ‘watched.’ Each point in the array was one bit of data. Thus, the machine received its instructions by ‘watching programs’ on a ‘television.’

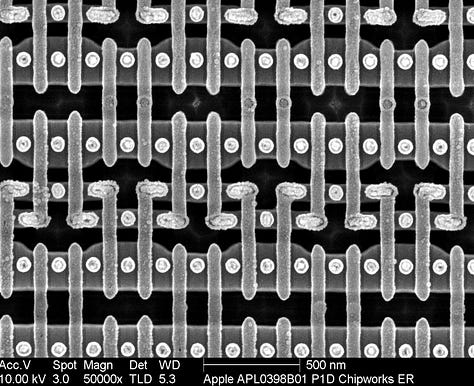

You can imagine how clunky this sort of apparatus would have been. The Manchester Baby had 1 kilobit of RAM and was incredibly inefficient by modern standards. The Baby took 52 minutes to find the highest proper factor of 2^18 (262,144). A modern iPhone can do that before a photon can travel from one end of a ruler to the other.
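The Baby’s first program is usually described as a search for the highest proper factor of 2^18 (262,144), testing candidates from the top down and dividing by repeated subtraction. The sketch below follows that description in spirit only; it is not a transcription of the original program, and the function name is made up for the example.

```python
def highest_proper_factor(n: int) -> int:
    """Try every candidate below n, largest first, dividing by repeated subtraction."""
    candidate = n - 1
    while candidate > 1:
        remainder = n
        while remainder > 0:
            remainder -= candidate   # subtraction stands in for division
        if remainder == 0:           # candidate divides n exactly
            return candidate
        candidate -= 1
    return 1


print(highest_proper_factor(2 ** 18))  # prints: 131072
```

The search walks through more than a hundred thousand candidates before it reaches 131,072, which is why a deliberately naive method made a good stress test for the new memory.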

So how did we get from the lumbering memory modules of SWAC and the Baby to handheld machines that run billions of times faster in under a century?

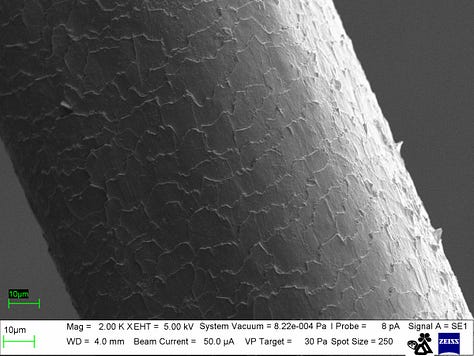

The answer is the transistor, invented in 1947.

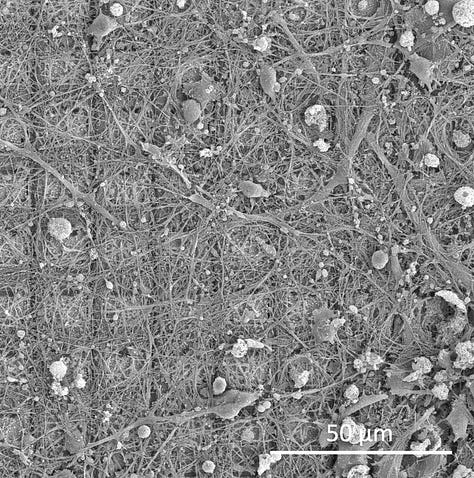

These semiconductor devices are a core building block of integrated circuits like microprocessors and RAM modules. They are solid-state, which is why we can build them so small that millions would fit on the end of a hair. Shrink them much smaller than they are now and the electrons start tunneling through the insulation.

Semiconductors allowed the ‘resolution’ of the electrical images introduced by CRT memory to be vastly enhanced even as the systems themselves got smaller.

This accelerated the hardware, and opened up the bandwidth of RAM so enormously that it became possible to encode complex, interactive images as data structures in digital memory. This data consisted of raster scan instructions which would allow the images to be constructed on a monitor and read by the user in like manner.

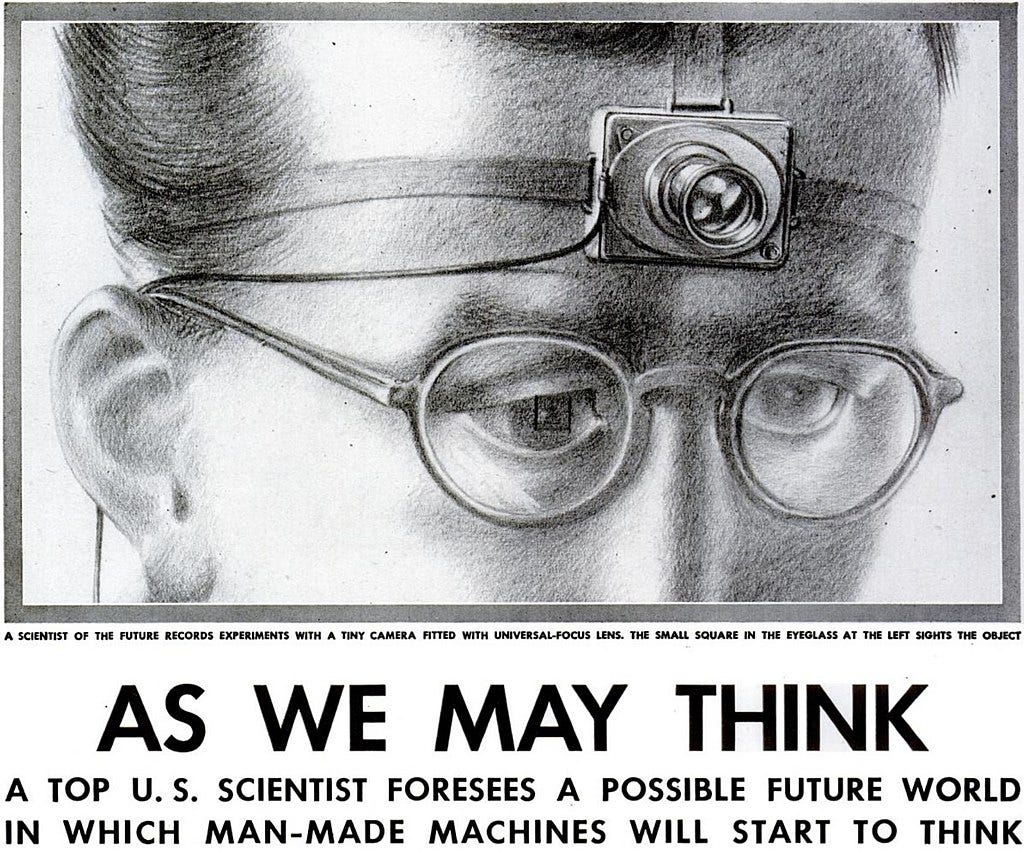

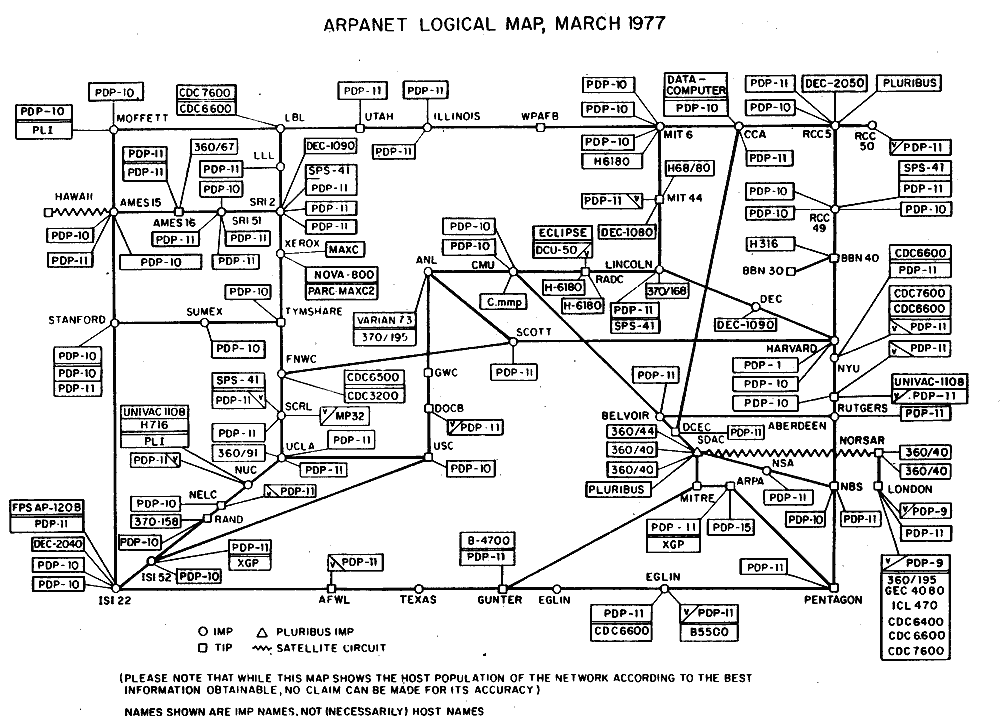

A demonstration of this was first achieved by Douglas Engelbart’s Augmentation of Human Intellect project, funded by ARPA, NASA and the US Air Force. The project’s ultimate objective was “intelligence amplification,” a theoretical process which would enhance human thought by technologically optimizing pattern recognition. This is laid out in Engelbart’s 1962 paper: Augmenting Human Intellect: A Conceptual Framework.

…‘Intelligence amplification’ does not imply any attempt to increase native human intelligence. The term ‘intelligence amplification’ seems applicable to our goal of augmenting the human intellect in that the entity to be produced will exhibit more of what can be called intelligence than an unaided human could; we will have amplified the intelligence of the human by organizing his intellectual capabilities into higher levels of synergistic structuring…

Engelbart was a radio and radar technician in World War II and was well acquainted with CRT technology by the time of the first computers. It can be argued he beat McLuhan to the punch, understanding that “the medium is the message” two years before the words were penned, and was already devising ways to apply it.

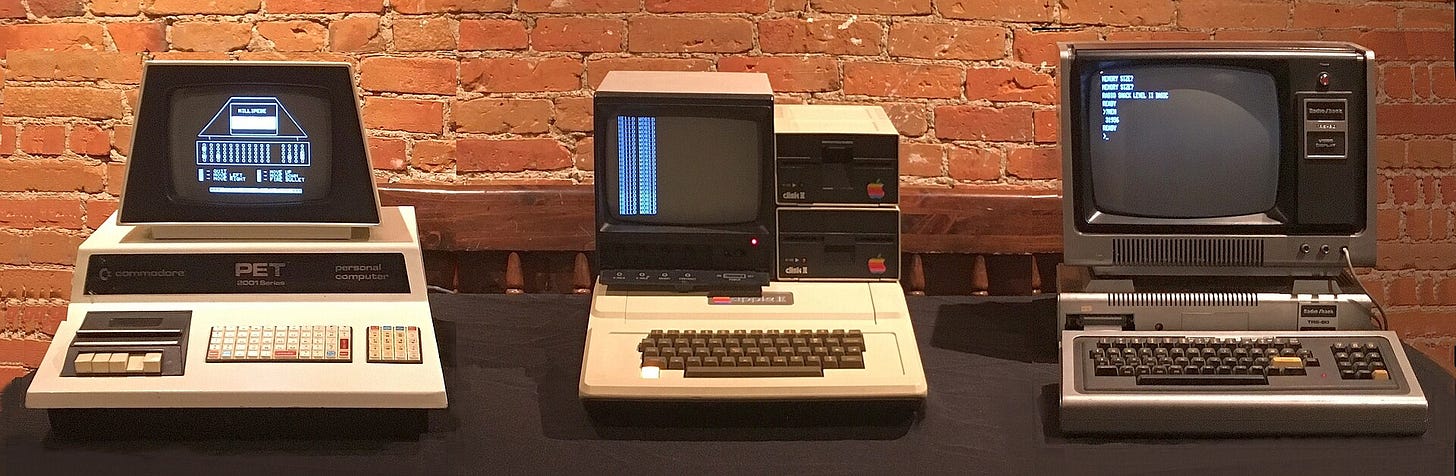

His research program bore NLS. It was the first computer system to incorporate a word processor, hypertext, a mouse, video monitors, windows, collaborative document editing and even video conferencing — all this in 1968.

NLS became DNLS, in use by ARPA by 1973. DNLS inspired Xerox PARC’s Alto system, famous for in turn inspiring the Apple Lisa — the first mass market personal computer with a GUI.

The inventor of the interface conceived it as a kind of initiatory gateway to bring forth a new kind of consciousness. An ‘augmented intellect’ with fewer limitations, able to perceive and solve problems in ways he and those of his time could not.

The Leviathan

The signs of design however are deeper and more pervasive than one man’s mission to enhance the human intellect.

Engelbart was a true visionary who imagined a future in which thought improves its tools, and the improved tools improve thought: a symbiotic feedback loop he also called ‘bootstrapping.’ However, this is evidently not what happened en masse, an indication that larger forces with quite different intentions had a hand in shaping these undertakings.

Something happened near the end of the Second World War that set all this in motion. It began with a series of landmark events conspicuously close together.

1942 First Nuclear Reactor.

1943 First Ingestion of LSD.

1944 Colossus Operational. First UFO reports by Allied Airmen.

1945 The Trinity Test. Nag Hammadi Library Discovered. ENIAC Operational.

1947 The Roswell Incident. First “Flying Saucers” Reported. First Wave of American UFO Sightings. The CIA is Formed. The Transistor is Invented.

In this tiny window, the Cold War, intelligence state, information age, counterculture and UFO phenomenon were all initialized. This is no coincidence, neither need we speculate or fall back on flimsy supernaturalism; for the systematic principles behind this strange unfurling were uncovered at the selfsame time.

In 1943, a seminal paper was composed: A Logical Calculus of the Ideas Immanent in Nervous Activity. Its authors, Warren McCulloch and Walter Pitts, made the original analogy between neurons and binary logic circuits. These men were the first to conceive of human thought as computation, and by extension, the first to envision a computer that could ‘think’ like us.

Their paper laid out the first model of a neural network and the groundwork for artificial intelligence before the Colossus was even turned on. But it also helped bring forth something far more important: cybernetics.

Cybernetics is the transdisciplinary study of circular causation. It comes from the Greek kubernḗtēs (steersman). ‘Government’ derives from the same word.

The standard example of a cybernetic system is a thermostat. If the ambient temperature around the sensor is too low, the system turns a heater on. When the sensor detects an input that is too high, it turns the heater back off. This ouroboric feedback cycle is a cybernetic loop.
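The loop just described can be written out as a minimal sketch. The thresholds, the heating and cooling rates, and the function names are all illustrative assumptions, not values from any real thermostat.

```python
def thermostat_step(temperature: float, heater_on: bool,
                    low: float = 19.0, high: float = 21.0) -> bool:
    """Decide the heater state from the sensed temperature (thresholds are illustrative)."""
    if temperature < low:
        return True       # too cold: switch the heater on
    if temperature > high:
        return False      # too warm: switch it back off
    return heater_on      # in between: leave the heater as it is


def simulate(start: float, steps: int) -> list[float]:
    """Toy room: gains 0.5 degrees per step with heat, loses 0.3 without."""
    temperature, heater_on, history = start, False, []
    for _ in range(steps):
        heater_on = thermostat_step(temperature, heater_on)  # sensor feeds the controller
        temperature += 0.5 if heater_on else -0.3            # controller feeds the room
        history.append(temperature)
    return history


readings = simulate(start=15.0, steps=200)
print(min(readings[50:]), max(readings[50:]))
```

The output of each step becomes the input of the next, which is the circular causation in question. Once the room has warmed up, the temperature never leaves a narrow band around the two thresholds.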

(‘Cyber’ is unrelated to computation etymologically. Rather, the conceptual link arose over time because the computer is the first truly cybernetic technology.)

William Ross Ashby, the pioneering cybernetician who formalized the concept of the ‘black box,’ formulated the principle of self-organization in 1947. He described the way that order can emerge out of chaotic local interactions in initially disordered systems.

The basis of all self-organization is the attractor. Physical systems evolve toward states of equilibrium within basins of attraction. An attractor is a set of equilibrated states which a system tends to approach once it enters the basin of attraction.
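A toy example may make the idea concrete. The logistic map used below is a stand-in chosen for this illustration, not something taken from Ashby; with the assumed parameter r = 2.5 it has a single fixed-point attractor at 0.6, and every starting value strictly between 0 and 1 lies in its basin.

```python
def settle(x: float, r: float = 2.5, steps: int = 100) -> float:
    """Iterate the logistic map x -> r * x * (1 - x) and return the final state."""
    for _ in range(steps):
        x = r * x * (1 - x)
    return x


starts = [0.05, 0.3, 0.5, 0.9]   # ASSUMPTION: arbitrary points inside the basin
finals = [settle(x0) for x0 in starts]
print(finals)
```

Wherever the system begins inside the basin, it ends up in the same place. The starting conditions are forgotten and only the attractor remains.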

(The ‘technological singularity’ is a theoretical attractor whose basin is likened to the gravity well of a black hole.)

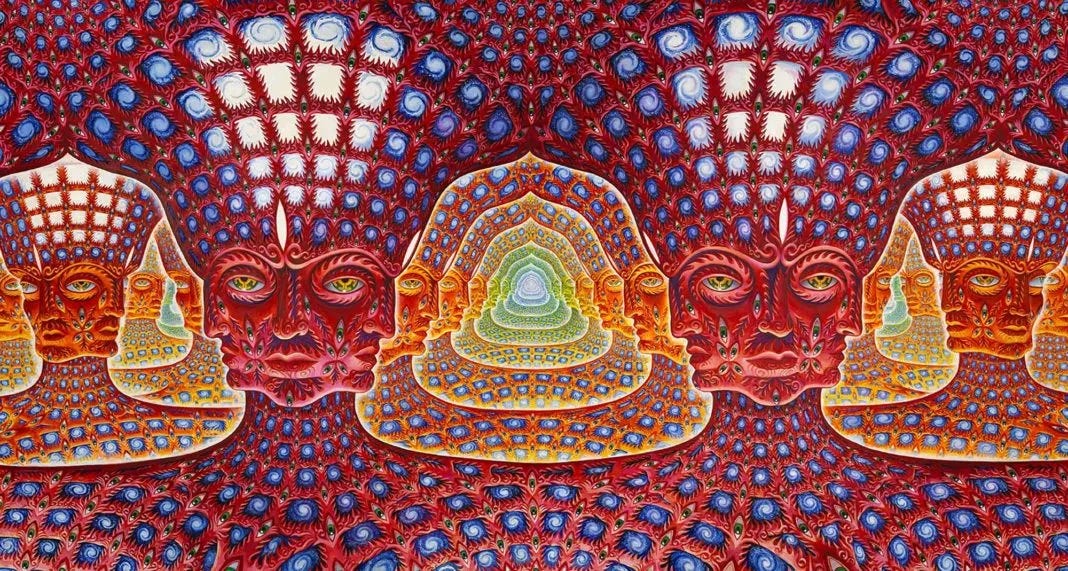

Imagine these metronomes are autonomic nervous systems. What they rest on is cybernetic infrastructure. This simple schematic is the blueprint for a self-organizing, self-regulating SDRAM system that uses humans in place of silicon memory banks.

SDRAM is a system in which independent memory banks are synchronized with an external clock signal. Unlike in earlier asynchronous systems, the synchronization allows SDRAM to perform overlapping operations in parallel. This is called ‘pipelining.’
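The benefit of overlapping operations can be shown with a toy cycle count. The latency and the number of accesses are assumed values picked for the example, not figures from any real SDRAM datasheet, and the model ignores everything except the overlap itself.

```python
LATENCY = 3    # ASSUMPTION: clock cycles from command to data
ACCESSES = 8   # ASSUMPTION: number of back-to-back reads


def unpipelined_cycles(accesses: int, latency: int) -> int:
    """Each access must finish before the next one starts."""
    return accesses * latency


def pipelined_cycles(accesses: int, latency: int) -> int:
    """A new access starts every cycle; the last one finishes `latency` cycles after it starts."""
    if accesses == 0:
        return 0
    return latency + (accesses - 1)


print(unpipelined_cycles(ACCESSES, LATENCY), pipelined_cycles(ACCESSES, LATENCY))  # prints: 24 10
```

The shared clock is what makes the overlap possible: every stage knows exactly when the next tick arrives, so a new request can be issued while earlier ones are still in flight.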

Subtask A is allotted to a node here, subtask B to a node over there, subtask C to one over yonder. Each is assigned where the system at large locates ideal conditions. Each is a ripple set in motion by a precisely struck, top-down intervention. Finally, all this activity comes together with perfect timing.

The self-structuring of such a neuromechanical intelligence system, synergizing homeostatic and cybernetic controls, would be so robust that it would be almost unstoppable once sufficiently active. It would be far smarter than all the people in it, and more powerful than all of its machinery combined.

It could be built and it would work, but only if you could optimize the way the neurochemistry interfaces with the digital architecture. If you can just get the brains and the computers to talk to each other fast enough, a critical threshold is crossed, and it becomes self-sustaining.

The conditions required to initiate the bootstrapping process for such a system could easily arise in the well-oiled, well-ordered war machine of the American military industrial complex at the height of the Second World War.

At this point in the story, there are two possibilities:

The system is very close to being realized.

The system is active and in place already and is simply being optimized (my current hypothesis with the caveat that the strength of its influence varies locally).

“Think Different”

I can open my computer and order almost anything online. By interacting with a few patterns while seated here at my desk, I can send causal ripples out into the universe which will result in a meaningful coincidence. That is: a stranger will drive to my house with the exact thing I saw and will place it outside my door.

This is so normal and so easy that when it happens I won’t marvel with wonder at the stars and give thanks to the mysterious beings who accepted my numerical sacrifice. But this would be magic to anyone who didn’t take it for granted.

All magic is stage magic.

Constellation is not magic either. But it’s also quite difficult to define it in a way where the act of ordering a pizza does not meet all of the definitional criteria. Indeed, ordering a pizza is actually closer to what is meant by constellation than what many imagine the word to mean.

When you order a pizza, the local psychophysical environment (your phone does most of the work in this case) configures itself in a way that allows you to perceive things non-locally using a set of signifiers with established meanings. By participating in this symbolic intercourse, you can coordinate with invisible forces and distant movements in a way that culminates in an intelligibly related event:

The exact pizza you envisioned shows up.

The only major difference is that when you place the order for a pizza the causation propagates through technological channels designed to facilitate such transactions. However, many of the more ‘magical’ examples which I have documented are still partially reliant upon this infrastructure. The systems are simply accessed by strange means and made to function unusually.

This is why we cannot define constellation adequately as any particular action, process or method. Any such definition would necessarily include any and all interactions with symbolic interfaces. Constellation, rather, is the application of a more fundamental knowledge of what action is. It is only this meta-perception which transmutes the actions into something greater than a sum or sequence.

“Organizing intellectual capabilities into higher levels of synergistic structuring.“

The person ordering a pizza usually still sees the world as a place of pool shots and domino runs. A book to be read in the order numbered on the pages. An internet transaction is consistent with and does not necessarily disrupt this worldview.

Nonetheless, such systems appear to be a kind of scaffolding or paradigmatic liminal space intended to obliquely suggest a different way of seeing. One in which symbolism is the first language of thought through which causation is accessed and upon which it is seen to supervene.

‘Intended’ by whom and for what purpose is unclear. It is reasonable to infer that there are a multitude of answers to that question.

These ‘suggestions’ have emerged at multiple cultural locations concurrently (including this present corpus of work) with no clear evidence of any conscious coordination or coherent agenda at human levels of organization. This would seem to indicate that they are coming from 'above' the system, not inside it.

(The emergent understanding that effects condition their own causes has occurred independently at least thrice.

1. Chris Langan's CTMU (mid 1980s)

2. The Cybernetic Culture Research Unit (1995)

3. The multilocal, roughly synchronous emergence of 'Constellation' (~2020)

All three model time cybernetically. However, each emergence has distinct characteristics, which is why definitional boundaries must be firmly established and maintained. Divergent and spiritually incompatible future emergences are likely to arise and ought to be anticipated.)

Do not suppose any of this is happening 'because of' the invention of computers.

That kind of thinking is obsolete.


Reading this at face value reminds me of a schizo post of how circuit boards are like the seals of Solomon, but it's so much deeper than that with actual history and tangible evidence, just remember over half of all of your interactions online are with robots and AI not people, definitely going to give this a second read when I have more time to look into it.

This is a relative of mine (disclosing this is enough of a dox so I won't say exactly how we're related), so this article hits quite close to home:

https://en.wikipedia.org/wiki/Philip_Don_Estridge

He was offered a position as the president of Apple by Steve Jobs himself, which he declined.

Academic psychedelia and techno-artifice runs deep in my family. I'm not sure how far back it goes; my grandmother told me that I am a member of the oldest continually documented family in America, having done the documentary research herself tracing us back to the first settlers at St. Augustine. I took the name Raphael when I was baptized into the Orthodox Church last week, mainly for his role in the Book of Enoch, but also the Book of Tobit.

But anyways, great article man. I've been watching your work for a while, and I'll catch up on what you've written.

God bless.